JINR used the DIRAC platform to triple the speed of scientific computing tasks

News, 10 February 2023

Since 2016, JINR has created and continues to develop a service for unified access to heterogeneous distributed computing resources based on the DIRAC Interware open platform; the service includes all the main computing resources of JINR. The platform has already made it possible to process large sets of jobs about three times faster. This speed-up comes, among other things, from the integration of the clouds of scientific organizations of the JINR Member States, the cluster of the National Autonomous University of Mexico, and the resources of the National Research Computer Network of Russia (NIKS), which provides network infrastructure access to more than 200 organizations of higher education and science. At present, the DIRAC-based service is used to solve the tasks of the collaborations of all three experiments at the Accelerator Complex of the NICA Megascience Project (MPD, BM@N, and SPD), as well as of the Baikal-GVD Neutrino Telescope.

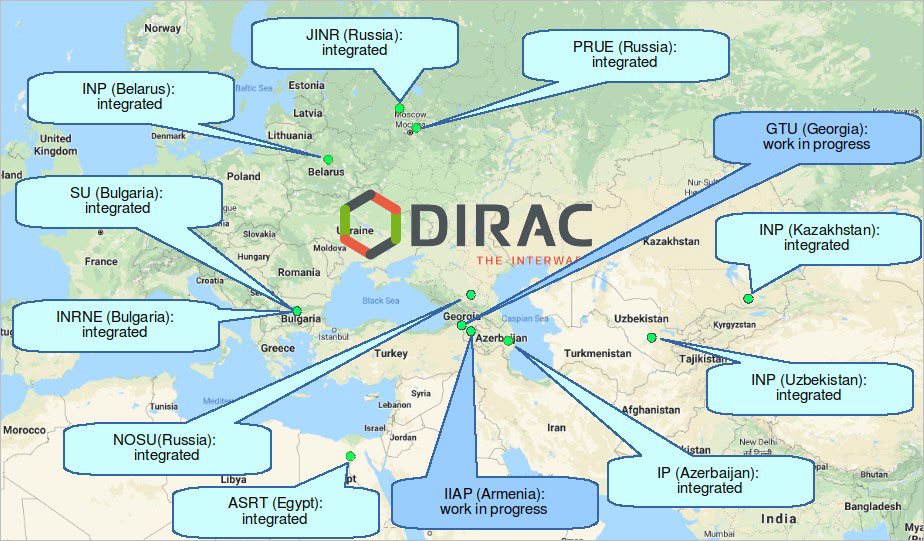

Fig. 1. Clouds of the JINR Member States’ organizations integrated into a distributed information and computing environment based on the DIRAC platform

“In fact, integration made it possible to combine all the large computing resources of JINR. Without integration, users would most likely have to choose one of the resources and configure all their workflows for it. A coherent infrastructure means that one is not tied to a specific resource but can use all the resources that are available. For large sets of jobs, this speeds up execution by about three times,” said Igor Pelevanyuk, a researcher at the Distributed Systems Sector of MLIT. He noted that without the DIRAC Interware-based service, the mass generation of MPD Experiment data would take longer and would completely occupy a separate computing resource for several months.

As of January 2023, thanks to the integration of resources using DIRAC, 1.9 million jobs had been completed on the distributed platform. The calculations performed are estimated at 13 million HEPSPEC2006-days, which is equivalent to 1,900 years of computing on one core of a modern central processor. Thus, the average execution time of one job in the system was almost 9 hours.
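The “almost 9 hours” figure follows from dividing the single-core equivalent by the number of jobs. The short Python sketch below redoes that arithmetic and, as a by-product, the HEPSPEC2006 rating per core implied by the two totals. It assumes a 365.25-day year, and the per-core rating is derived here rather than stated in the source.

```python
# Totals quoted in the article (as of January 2023).
JOBS = 1_900_000          # completed jobs
HS06_DAYS = 13_000_000    # total work in HEPSPEC2006-days
CORE_YEARS = 1_900        # single-core equivalent of that work

DAYS_PER_YEAR = 365.25    # assumption: Julian year
HOURS_PER_YEAR = DAYS_PER_YEAR * 24


def average_job_hours(core_years: float, jobs: int) -> float:
    """Mean duration of one job if the total core time is shared evenly."""
    return core_years * HOURS_PER_YEAR / jobs


def implied_hs06_per_core(hs06_days: float, core_years: float) -> float:
    """HEPSPEC2006 score of the reference core implied by the two totals."""
    return hs06_days / (core_years * DAYS_PER_YEAR)


if __name__ == "__main__":
    print(f"average job: {average_job_hours(CORE_YEARS, JOBS):.1f} h")
    print(f"implied core rating: {implied_hs06_per_core(HS06_DAYS, CORE_YEARS):.1f} HS06")
```

This prints an average of about 8.8 hours per job and a reference core of roughly 18.7 HS06.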

To estimate computing speed, the scientists used the HEPSPEC2006 benchmark, a programme that measures how fast a processor performs calculations similar to those of Monte Carlo data generation. Knowing the benchmark results on different resources makes it possible to put all the processors involved, with their different computing speeds, on a common scale and to evaluate the contribution of each resource to distributed computing.
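The normalization described above can be sketched in a few lines of Python: raw core-hours on each resource are multiplied by that resource's per-core benchmark score, so that work done on fast and slow processors becomes comparable. The resource names and numbers are hypothetical, and this illustrates the idea rather than the accounting code used at JINR.

```python
from dataclasses import dataclass


@dataclass
class Resource:
    name: str
    hs06_per_core: float  # benchmark score of one core on this resource
    core_hours: float     # CPU time consumed by jobs on this resource


def normalized_work(resources: list[Resource]) -> dict[str, float]:
    """Convert raw core-hours into HS06-hours so resources can be compared."""
    return {r.name: r.core_hours * r.hs06_per_core for r in resources}


def contribution_shares(resources: list[Resource]) -> dict[str, float]:
    """Fraction of the total normalized work done by each resource."""
    work = normalized_work(resources)
    total = sum(work.values())
    return {name: w / total for name, w in work.items()}


# Hypothetical example: equal CPU time, but cores of different speed.
pool = [
    Resource("resource-A", hs06_per_core=10.0, core_hours=1000.0),
    Resource("resource-B", hs06_per_core=20.0, core_hours=1000.0),
]

if __name__ == "__main__":
    for name, share in contribution_shares(pool).items():
        print(f"{name}: {share:.0%}")
```

Both resources consumed the same CPU time, yet the one with faster cores is credited with two thirds of the work, which is exactly the distinction raw core-hours would hide.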

“The more resources we have, the faster we can, on average, perform a given amount of calculations. In total, 45% of calculations were processed on Tier1 and Tier2 resources. The Govorun Supercomputer has done about the same amount of work, but it is there that we run the jobs that are particularly demanding of RAM and free disk space. Such jobs often cannot be processed effectively on the other resources available to us,” Igor Pelevanyuk commented.

At JINR, the DIRAC Interware is used mainly where a large amount of calculations is required that can be divided into tens of thousands of independent jobs. As a rule, for scientific computations with a smaller total volume, one resource is enough. On each integrated resource, part of the computing power is allocated to tasks submitted through DIRAC. This share is determined by the policy of the particular resource, its current load, and the amount of work that needs to be done in a certain period of time. The largest shares are at the Tier1 and Tier2 centres, where two thousand cores are allocated to DIRAC; at the Govorun Supercomputer, with up to two thousand cores depending on the load; and at the cloud, where up to 500 cores are allocated to DIRAC tasks. According to Igor Pelevanyuk, if new experiments that can effectively use the DIRAC Interware-based service appear, it will be easier for them to get started, since the basic schemes of work within the distributed infrastructure have already been developed and tested.
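Why pooling shares from several resources shortens the time needed to finish a large batch can be shown with a toy model. The Python sketch below assumes identical, independent jobs, identical cores, and that every allocated core is free; each free slot pulls the next job from one shared queue, loosely in the spirit of a pilot-based scheme, although it is not DIRAC code. The slot counts reuse the maximum allocations quoted above, while the batch size and job length are illustrative.

```python
import heapq


def makespan(num_jobs: int, job_hours: float, slots: dict[str, int]) -> float:
    """Time until the last of `num_jobs` identical, independent jobs finishes
    when every free slot takes the next job from a single shared queue."""
    # One heap entry per slot: (time at which the slot becomes free, resource).
    free_at = [(0.0, name) for name, count in slots.items() for _ in range(count)]
    heapq.heapify(free_at)
    finish = 0.0
    for _ in range(num_jobs):
        start, name = heapq.heappop(free_at)   # earliest available slot
        end = start + job_hours
        finish = max(finish, end)
        heapq.heappush(free_at, (end, name))   # slot is busy until `end`
    return finish


# Maximum DIRAC allocations quoted in the text (cores).
SINGLE = {"tier1_tier2": 2000}
POOLED = {"tier1_tier2": 2000, "govorun": 2000, "cloud": 500}

if __name__ == "__main__":
    for label, slots in (("single resource", SINGLE), ("pooled", POOLED)):
        print(f"{label}: {makespan(20_000, 9.0, slots):.0f} h")
```

Under these assumptions, 20,000 nine-hour jobs take 90 hours on the 2,000 Tier1/Tier2 cores alone and 45 hours on the pooled 4,500 cores. The speed-up of about three times reported in the article reflects real loads and job mixes, which this toy model does not attempt to reproduce.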

“Many researchers work within one of the infrastructures, i.e. the supercomputer, the cloud, the NICA Computing Cluster, etc. This is enough for a number of tasks, and if no significant increase in the computing load is expected in the future, then switching to the system we have created will most likely not be required. It is not a universal replacement for standard approaches. However, for complex computational tasks, the platform offers a new approach that makes it possible to reach a new level of complexity and to increase the amount of resources available for research by an order of magnitude,” the scientist said.

The most active user of the infrastructure at the moment is the collaboration of the MPD experiment. MPD, one of the two detectors at the NICA Collider, accounts for 85% of the calculations performed. Since the detector has not started operation yet, the current calculations for MPD are devoted to Monte Carlo simulation. Special computer programmes “collide” particles virtually and trace the decay products through the matter of the experimental facility; this makes it possible to debug and tune the reconstruction algorithms and detector data analysis, and it helps to shape the scientific programme.

Simulated data will continue to be produced during the experimental runs as well. “We use a set of generators that allows us to create such events; then we run a real experiment and collect two sets of data: real data from the detector and the data generated by us. If there is no significant difference between them for a selected, well-studied physical process, then the experimental data we have collected correspond to reality. Together with the software developed in the experiment for data reconstruction and analysis, these data can be used to search for new physics.”
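The article does not say which statistical test is used to decide that simulated and real data show “no significant difference”. One common choice for comparing the distribution of a single observable is the two-sample Kolmogorov-Smirnov test; the Python sketch below computes its statistic and compares it with the standard large-sample critical value at the 5% level (coefficient 1.358). Treat it as an illustration of the idea, not as the procedure used by the MPD collaboration.

```python
import math
from bisect import bisect_right


def ks_statistic(sample_a: list[float], sample_b: list[float]) -> float:
    """Two-sample Kolmogorov-Smirnov statistic: the largest vertical gap
    between the empirical cumulative distribution functions of the samples."""
    a, b = sorted(sample_a), sorted(sample_b)
    gap = 0.0
    # The supremum of |F_a - F_b| is reached at one of the observed values.
    for x in a + b:
        cdf_a = bisect_right(a, x) / len(a)
        cdf_b = bisect_right(b, x) / len(b)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap


def differs_significantly(sample_a: list[float], sample_b: list[float],
                          c_alpha: float = 1.358) -> bool:
    """Large-sample test: reject 'same distribution' when D exceeds
    c(alpha) * sqrt((n + m) / (n * m)); c = 1.358 corresponds to alpha = 0.05."""
    n, m = len(sample_a), len(sample_b)
    threshold = c_alpha * math.sqrt((n + m) / (n * m))
    return ks_statistic(sample_a, sample_b) > threshold
```

In practice one would apply such a comparison to several observables of the chosen reference process, for example a simulated and a measured momentum spectrum.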

Some of the jobs in this project were performed by colleagues from Mexico, which officially joined the NICA Megascience Project in 2019. The participation of the computing cluster of the National Autonomous University of Mexico, a collaborator of the MPD Experiment at NICA, showed that the service can also be used to integrate resources, including those outside JINR.

The integration of the cloud infrastructures of the JINR Member States deserves special attention (Fig. 1). It required the development of a special software module to integrate OpenNebula-based cloud resources into the DIRAC system.

“The integration of external resources is a window of opportunity for other countries that would like to participate in computing for such large scientific collaborations as Baikal-GVD, MPD, SPD, and BM@N. If the participants decide to make part of their contribution in the form of computing, their resources can be integrated into the existing system, and the only question will be how many resources they are able to allocate,” the scientist said.

A series of works “Development and implementation of a unified access to heterogeneous distributed resources of JINR and the Member States on the DIRAC platform” was awarded the Second JINR Prize for 2021 in the nomination “Scientific-research and scientific-technical papers”.

The research was carried out jointly at the Meshcheryakov Laboratory of Information Technologies, Veksler and Baldin Laboratory of High Energy Physics, JINR, and the Center for Particle Physics, Aix-Marseille University (Marseille, France) by a team of authors: Vladimir V. Korenkov, Nikolay A. Kutovskiy, Valery V. Mitsyn, Andrey A. Moshkin, Igor S. Pelevanyuk, Dmitry V. Podgainy, Oleg V. Rogachevskiy, Vladimir V. Trofimov, Andrey Yu. Tsaregorodtsev.

About the main results of the work

The integration of computing resources based on the DIRAC platform makes it possible to include almost any type of computing resource in a unified system and to provide it to users through a single web interface, command-line interface, or programming interface. The relevance of these studies and approaches stems primarily from the experiments of the NICA Megascience Project: BM@N, MPD, and SPD. According to the 2018 document “TDR MPD: Data Acquisition System”, the data flow from the MPD Detector will be at least 6.5 GiB/s. For the SPD Experiment, preliminary estimates of the data rate are close to 20 GiB/s. Processing, transferring, storing, and analyzing such large volumes of data will require a significant amount of computing and storage resources.
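To give a sense of scale, the quoted rates can be converted into daily volumes. The Python sketch below assumes continuous data-taking at exactly the quoted rate for a full day, which the source does not claim (the MPD figure is a lower bound, and real duty cycles are lower), so the results indicate orders of magnitude rather than predictions.

```python
GIB = 2**30               # bytes in a gibibyte
TIB = 2**40               # bytes in a tebibyte
SECONDS_PER_DAY = 86_400


def daily_volume_tib(rate_gib_per_s: float, seconds: int = SECONDS_PER_DAY) -> float:
    """Data volume in TiB accumulated at a constant rate over `seconds`."""
    return rate_gib_per_s * GIB * seconds / TIB


if __name__ == "__main__":
    for name, rate in (("MPD (lower bound)", 6.5), ("SPD (preliminary)", 20.0)):
        print(f"{name}: {daily_volume_tib(rate):,.1f} TiB/day")
```

At these rates, a day of continuous operation would yield roughly 548 TiB for MPD and roughly 1,688 TiB for SPD.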

JINR has a large number of different computing resources: the Tier1/Tier2 clusters, the Govorun Supercomputer, the cloud, the NICA Cluster. The resources of each of them can be used to achieve the goals of computing for the NICA experiments. The main difficulty in this case is that these resources are different in terms of the architecture, access and authorisation procedures, and usage methods. To ensure their efficient use, it is necessary, on the one hand, to integrate the resources into a unified system, and on the other hand, not to interfere with their current operation and the performance of other tasks.

To integrate these heterogeneous resources, it was decided to use the DIRAC Interware platform [1]. DIRAC (Distributed Infrastructure with Remote Agent Control) functions as middleware between users and various computing resources, ensuring efficient, transparent, and reliable use by offering a common interface to heterogeneous resource providers. The DIRAC platform was originally developed by the LHCb collaboration to organize its computing. Since 2008, it has been developed as an open-source product for organizing distributed computing on heterogeneous resources.

The DIRAC platform was deployed at JINR in 2016 in an experimental mode [2]. Typical simulation jobs for the BM@N and MPD experiments, as well as test jobs not related to the experiments, were used to evaluate the platform’s efficiency.

In 2018, work was carried out to integrate the cloud infrastructures of JINR and its Member States into the DIRAC-based distributed platform [3]. This required the development of a special module that allows DIRAC to initiate the creation of virtual machines in OpenNebula, the system on which the JINR computing cloud and the Member States’ clouds are built. The module was developed by specialists of the Laboratory of Information Technologies and added to the DIRAC source code [4]. At the moment, the module is actively used not only at JINR but also in the infrastructures of the BES-III and JUNO experiments. The integration of the clouds of the JINR Member States’ organizations into the DIRAC-based distributed platform (Fig. 1) opens up new opportunities for the Member States to participate in computing for the NICA Megascience Project experiments [3].
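The article does not describe the module's internals. For orientation only, the Python sketch below shows what requesting a virtual machine from OpenNebula looks like through its XML-RPC API, whose `one.vm.allocate` call takes a session string (`username:password`), a VM template, and a hold flag. The endpoint, credentials, and template values are hypothetical, and this is not the code of the DIRAC module.

```python
import xmlrpc.client


def build_vm_template(name: str, vcpu: int, memory_mb: int, image_id: int) -> str:
    """Render a minimal OpenNebula VM template (ATTRIBUTE = VALUE syntax).
    The attribute values here are illustrative placeholders."""
    return "\n".join([
        f'NAME = "{name}"',
        "CPU = 1",
        f"VCPU = {vcpu}",
        f"MEMORY = {memory_mb}",
        f"DISK = [ IMAGE_ID = {image_id} ]",
    ])


def allocate_vm(endpoint: str, session: str, template: str) -> int:
    """Ask OpenNebula to create a VM from `template` and return its ID."""
    server = xmlrpc.client.ServerProxy(endpoint)
    # Response layout: [success flag, VM ID or error message, error code, ...]
    response = server.one.vm.allocate(session, template, False)
    if not response[0]:
        raise RuntimeError(f"OpenNebula refused the request: {response[1]}")
    return response[1]


if __name__ == "__main__":
    # Hypothetical image ID; a real call would also need a reachable endpoint,
    # e.g. allocate_vm("http://one.example.org:2633/RPC2", "user:password", tmpl).
    tmpl = build_vm_template("dirac-worker", vcpu=2, memory_mb=4096, image_id=123)
    print(tmpl)
```

The part that would make a new VM start processing DIRAC jobs is deliberately omitted.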

Simultaneously with the integration of computing clouds, a DIRAC-based solution for integrating heterogeneous computing resources was worked out for the currently operating BM@N experiment and for the future MPD experiment at the NICA Collider under construction. The throughput of the disk and tape storage systems was studied, all the main resources were stress-tested, and approaches to standard tasks of data generation, processing, and transfer were developed [5,6].

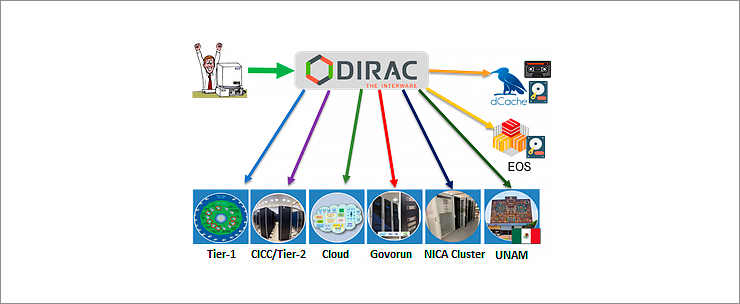

In August 2019, the first set of Monte Carlo simulation jobs for the MPD experiment was sent to the resources of the Tier1 and Tier2 grid clusters via DIRAC. Then the Govorun Supercomputer was integrated into the distributed computing platform (DCP). In the summer of 2020, the NICA Cluster and the cluster of the National Autonomous University of Mexico (UNAM) were added. dCache, which manages disk and tape storage, and EOS were integrated as storage systems. Notably, the UNAM Cluster became the first computing resource located outside Europe and Asia to be included in the DIRAC infrastructure at JINR. The scheme of the integration of geographically distributed heterogeneous resources based on the DIRAC Interware is shown in Fig. 2.

Fig. 2. Scheme of the integration of geographically distributed heterogeneous resources based on the DIRAC Interware

The distributed computing platform enables load distribution across all the resources involved, thereby accelerating calculations and making more efficient use of the integrated resources [6]. The Govorun Supercomputer is an example: depending on its load from other types of tasks, its temporarily available resources can be allocated to tasks related to the experiments of the NICA Megascience Project [7].

The DIRAC-based distributed platform is successfully used by MPD not only for job management but also for data management [8]. All data are registered in the file catalogue, and metadata are assigned to some of them. This makes it possible to select files by characteristics such as collision energy, beam composition, generator name, etc.
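Selecting files by metadata amounts to filtering catalogue entries on key-value pairs. The Python sketch below uses a tiny in-memory catalogue; the paths, metadata keys, and values are hypothetical, and it does not reproduce the interface of the DIRAC File Catalog.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    lfn: str                                  # logical file name (path in the catalogue)
    meta: dict = field(default_factory=dict)  # metadata attached to the file


def find_files(catalog: list[CatalogEntry], **query) -> list[str]:
    """Return names of files whose metadata match every key=value in `query`."""
    return [entry.lfn for entry in catalog
            if all(entry.meta.get(key) == value for key, value in query.items())]


# Hypothetical catalogue contents.
CATALOG = [
    CatalogEntry("/mpd/sim/set1/f001.root", {"energy_gev": 9.2, "beam": "BiBi", "generator": "UrQMD"}),
    CatalogEntry("/mpd/sim/set2/f001.root", {"energy_gev": 11.0, "beam": "AuAu", "generator": "GSM"}),
    CatalogEntry("/mpd/sim/set3/f001.root"),  # registered without metadata
]

if __name__ == "__main__":
    print(find_files(CATALOG, beam="BiBi"))
    print(find_files(CATALOG, generator="GSM", energy_gev=11.0))
```

Files registered without metadata are still in the catalogue but match only an empty query, which mirrors the text's point that metadata are assigned to some files only.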

The MPD example and the experience gained while introducing new approaches made it possible to propose the DIRAC-based distributed computing platform as the standard solution at JINR for the mass launch of jobs. The DCP was used by the Baikal-GVD experiment, one of the key experiments of the JINR neutrino programme; the data obtained were used in studies on the observation of muon neutrinos and were reported at a specialised conference [9]. The BM@N Experiment tested DIRAC for launching jobs related to the primary processing of data obtained at the detector and successfully completed 22 thousand simulation jobs.

Participation in volunteer computing for the study of the SARS-CoV-2 virus within the Folding@Home project [9] became another area of using the DIRAC platform at JINR. Cloud resources of both the Institute and its Member States’ organizations that are not occupied by JINR’s main activities are successfully involved in COVID-19 research. The contribution of all the cloud infrastructures is recorded both in the Folding@Home information system, within the “Joint Institute for Nuclear Research” group, and in DIRAC’s own accounting system.

To date, thanks to resource integration using DIRAC, 1.9 million jobs have been completed on the distributed platform. The calculations performed are estimated at 13 million HEPSPEC2006-days, which is equivalent to 1,900 years of computing on one core of a central processor. 90% of all calculations were performed on the Govorun Supercomputer, Tier1, and Tier2, 6% on the JINR cloud infrastructure, and 4% on other resources.

The major user of the distributed platform is the MPD experiment, which accounts for 85% of the calculations. DIRAC is used to carry out the programme of mass data simulation runs for MPD. More than 1.3 billion events were modeled with the UrQMD, GSM, 3-Fluid Dynamics, vHLLE_UrQMD, and other generators, and 439 million events were subsequently reconstructed. The total amount of data produced exceeds 1.2 PB. The Baikal-GVD experiment accounted for 7% of calculations, SPD for 5%, BM@N for 2%, and Folding@Home for 1%.
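The percentages above can be turned into absolute amounts of work by applying them to the 13 million HEPSPEC2006-days quoted for the whole platform. The Python sketch below does this for both the per-experiment and the per-resource breakdowns; it assumes that both sets of percentages refer to the same total, which is how the text reads but is not stated explicitly.

```python
TOTAL_HS06_DAYS = 13_000_000

# Percentages quoted in the text.
BY_EXPERIMENT = {"MPD": 85, "Baikal-GVD": 7, "SPD": 5, "BM@N": 2, "Folding@Home": 1}
BY_RESOURCE = {"Govorun, Tier1, Tier2": 90, "JINR cloud": 6, "other resources": 4}


def absolute_work(shares_pct: dict[str, int],
                  total: float = TOTAL_HS06_DAYS) -> dict[str, float]:
    """Convert percentage shares into HS06-days; the shares must add up to 100."""
    if sum(shares_pct.values()) != 100:
        raise ValueError("shares do not add up to 100%")
    return {name: total * pct / 100 for name, pct in shares_pct.items()}


if __name__ == "__main__":
    for breakdown in (BY_EXPERIMENT, BY_RESOURCE):
        for name, work in absolute_work(breakdown).items():
            print(f"{name}: {work:,.0f} HS06-days")
```

For MPD this gives about 11.05 million HS06-days, and for the JINR cloud about 0.78 million.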

In addition to the ability to launch jobs en masse, the integration of a large number of heterogeneous resources made their centralised analysis possible. An approach to evaluating the performance of different computing resources was developed; building on the results of its application, a method was proposed for assessing performance with real user jobs rather than artificial tests [10]. It is now possible to qualitatively evaluate the performance of different resources. In some cases, this made it possible to detect when a resource was being used inefficiently. It was also found that the standard DIRAC Benchmark underestimated performance for some processor models, which was reported to the developers of this benchmark.
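The idea of judging resources by real user jobs can be illustrated as follows: if the same kind of job runs on different processor models, the ratio of median wall times gives a measured relative speed, which can then be compared with the speed predicted by a benchmark score. The Python sketch below flags models for which the benchmark underestimates the measured speed by more than a chosen tolerance. The CPU model names, timings, scores, and 10% tolerance are hypothetical; the sketch assumes CPU-bound jobs of equal size and is not the method published in [10].

```python
from statistics import median


def measured_speed(walltimes: dict[str, list[float]], reference: str) -> dict[str, float]:
    """Speed of each CPU model relative to `reference`, from wall times of the same job type."""
    ref_time = median(walltimes[reference])
    return {model: ref_time / median(times) for model, times in walltimes.items()}


def underestimated_models(measured: dict[str, float], benchmark: dict[str, float],
                          reference: str, tolerance: float = 0.10) -> list[str]:
    """Models whose measured speed exceeds the benchmark prediction by more than `tolerance`."""
    flagged = []
    for model, speed in measured.items():
        predicted = benchmark[model] / benchmark[reference]
        if speed > predicted * (1 + tolerance):
            flagged.append(model)
    return flagged


# Hypothetical data: wall times in hours and benchmark scores per core.
WALLTIMES = {"cpu-A": [10.0, 11.0, 9.0], "cpu-B": [5.0, 5.0, 6.0]}
BENCHMARK = {"cpu-A": 10.0, "cpu-B": 15.0}

if __name__ == "__main__":
    speeds = measured_speed(WALLTIMES, reference="cpu-A")
    print(speeds)
    print(underestimated_models(speeds, BENCHMARK, reference="cpu-A"))
```

Here the benchmark predicts cpu-B to be 1.5 times faster than cpu-A, while the jobs run twice as fast, so cpu-B is flagged. Medians are used so that a few unusually slow or fast jobs do not dominate the estimate.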

With the growing use of the distributed computing platform, additional services for users had to be created. One of them evaluates performance at the level of a specific user job, which helps users better understand how their jobs use computing resources, the network, and RAM. It also enables early detection of problems in the software packages used for data processing. The second service was developed specifically for the MPD Experiment. It provides users with a job launching interface expressed not in terms of the number of jobs, executables, and passed arguments, but in physical terms, i.e. the number of events, generator, energy, and beam composition [11]. Thus, physicists get an interface based on concepts closer to their work. This is the first example of a problem-oriented interface within the DIRAC infrastructure at JINR. In the future, similar interfaces may be developed for other scientific groups using this distributed platform.
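A physics-oriented request ultimately has to be translated into the job-level terms that DIRAC works with. The Python sketch below shows one way to do that: a request stated as generator, energy, beam composition, and number of events is split into independent jobs of a fixed size. The class, the field names, and the 1,000-events-per-job default are hypothetical and do not describe the actual MPD service from [11].

```python
import math
from dataclasses import dataclass


@dataclass
class SimulationRequest:
    generator: str      # e.g. "UrQMD"
    energy_gev: float   # collision energy
    beam: str           # beam composition, e.g. "BiBi"
    events: int         # total number of events requested


def split_into_jobs(req: SimulationRequest, events_per_job: int = 1000) -> list[dict]:
    """Translate a physics-level request into independent job parameter sets."""
    n_jobs = math.ceil(req.events / events_per_job)
    jobs = []
    for i in range(n_jobs):
        first = i * events_per_job
        jobs.append({
            "generator": req.generator,
            "energy_gev": req.energy_gev,
            "beam": req.beam,
            "first_event": first,
            "n_events": min(events_per_job, req.events - first),  # last job may be shorter
        })
    return jobs


if __name__ == "__main__":
    request = SimulationRequest("UrQMD", 9.2, "BiBi", events=2500)
    for job in split_into_jobs(request):
        print(job)
```

Fixing the job size keeps the jobs independent and of similar duration, which suits the mass-launch model described earlier in the article.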

The main results are:

- A module for integrating OpenNebula-based cloud resources into DIRAC was developed and implemented. Using this module, the clouds of JINR and its Member States were combined to perform joint computing. The joint infrastructure of JINR and its Member States was used to participate in the Folding@Home project to study the SARS-CoV-2 virus, as well as to launch simulation jobs for the Baikal-GVD experiment.

- Computing resources were integrated into DIRAC: the Govorun Supercomputer, Tier1, Tier2, the NICA cluster, the JINR cloud, the JINR Member States’ clouds, and the UNAM cluster. Storage resources were integrated as well: EOS disk storage and dCache tape storage. The workflows of mass data simulation for the MPD experiment were adapted to launch jobs and save data using the DIRAC platform. Since 2019, this platform has been used to carry out the programme of mass data simulation runs of the MPD Experiment.

- A new approach to analysing the performance of distributed heterogeneous computing resources was developed. Its application made it possible to determine the performance of the computing resources integrated into DIRAC.

List of articles:

- [1] Korenkov, V., I. Pelevanyuk, P. Zrelov, and A. Tsaregorodtsev. 2016. “Accessing Distributed Computing Resources by Scientific Communities Using DIRAC Services.” CEUR Workshop Proceedings, Vol. 1752, pp. 110-115.

- [2] Gergel, V., V. Korenkov, I. Pelevanyuk, M. Sapunov, A. Tsaregorodtsev, and P. Zrelov. 2017. “Hybrid Distributed Computing Service Based on the DIRAC Interware.” Communications in Computer and Information Science, Vol. 706. doi:10.1007/978-3-319-57135-5_8

- [3] Balashov, N. A., N. A. Kutovskiy, A. N. Makhalkin, Y. Mazhitova, I. S. Pelevanyuk, and R. N. Semenov. 2021. “Distributed Information and Computing Infrastructure of JINR Member States’ Organizations.” AIP Conference Proceedings 2377, 040001. https://doi.org/10.1063/5.0063809

- [4] Balashov, N. A., R. I. Kuchumov, N. A. Kutovskiy, I. S. Pelevanyuk, V. N. Petrunin, and A. Yu. Tsaregorodtsev. 2019. “Cloud Integration within the DIRAC Interware.” CEUR Workshop Proceedings, Vol. 2507, pp. 256-260.

- [5] Korenkov, V., I. Pelevanyuk, and A. Tsaregorodtsev. 2019. “DIRAC System as a Mediator between Hybrid Resources and Data Intensive Domains.” CEUR Workshop Proceedings, Vol. 2523, pp. 73-84.

- [6] Korenkov, V., I. Pelevanyuk, and A. Tsaregorodtsev. 2020. “Integration of the JINR Hybrid Computing Resources with the DIRAC Interware for Data Intensive Applications.” Communications in Computer and Information Science, Vol. 1223 CCIS. doi:10.1007/978-3-030-51913-1_3

- [7] Belyakov, D. V., A. G. Dolbilov, A. N. Moshkin, I. S. Pelevanyuk, D. V. Podgainy, O. V. Rogachevsky, O. I. Streltsova, and M. I. Zuev. 2019. “Using the ‘Govorun’ Supercomputer for the NICA Megaproject.” CEUR Workshop Proceedings, Vol. 2507, pp. 316-320.

- [8] Kutovskiy, N., V. Mitsyn, A. Moshkin, I. Pelevanyuk, D. Podgayny, O. Rogachevsky, B. Shchinov, V. Trofimov, and A. Tsaregorodtsev. 2021. “Integration of Distributed Heterogeneous Computing Resources for the MPD Experiment with DIRAC Interware.” Physics of Particles and Nuclei 52 (4): 835-841. doi:10.1134/S1063779621040419

- [9] Kutovskiy, N. A., I. S. Pelevanyuk, and D. N. Zaborov. 2021. “Using Distributed Clouds for Scientific Computing.” CEUR Workshop Proceedings, Vol. 3041, pp. 196-201.

- [10] Pelevanyuk, I. 2021. “Performance Evaluation of Computing Resources with DIRAC Interware.” AIP Conference Proceedings 2377, 040006. https://doi.org/10.1063/5.0064778

- [11] Moshkin, A. A., I. S. Pelevanyuk, and O. V. Rogachevskiy. 2021. “Design and Development of Application Software for the MPD Distributed Computing Infrastructure.” CEUR Workshop Proceedings, Vol. 3041, pp. 321-325.